Prisoner’s Dilemma

The Prisoner’s Dilemma is one of the most cited examples in game theory and in the academic study of economics and international relations. It has also been used to help understand economics, politics, elections, and crime, as well as behavior in business and among individuals in other settings.

In this article, we explain the prisoner’s dilemma, extensions of the game, and its applications in many academic fields. We also discuss ways to “beat” the prisoner’s dilemma (reasons why someone might be less likely to defect) and examine a recent experiment on the prisoner’s dilemma carried out by academics.

What is the Prisoner’s Dilemma?

The Prisoner’s Dilemma is a two-person game (or an N-person game) in which the outcome of the game (or interaction) depends on the decisions of both actors. It is one type of interaction studied within the broader field of Game Theory, which examines these sorts of interactions between actors. In each game, the choices available to a player are called strategies. As Zagare (1986) writes, “strategies come in two types, pure and mixed. A pure strategy is a complete contingency plan that specifies a choice for a player for every choice that might arise in the game. A mixed strategy involves the use of a particular probability distribution to select one pure strategy from among a subset of a player’s pure strategies” (62).
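The difference between the two types of strategy can be illustrated with a minimal Python sketch. The function name `play_mixed` and the probabilities used here are hypothetical, chosen only for illustration; they do not come from Zagare.

```python
import random

# The two pure strategies available in a one-shot Prisoner's Dilemma.
PURE_STRATEGIES = ["cooperate", "defect"]


def play_mixed(probabilities, rng):
    """Pick one pure strategy according to a probability distribution.

    `probabilities` maps each pure strategy to its probability. The values
    used below are illustrative assumptions, not taken from the article.
    """
    strategies = list(probabilities)
    weights = [probabilities[s] for s in strategies]
    return rng.choices(strategies, weights=weights, k=1)[0]


rng = random.Random(0)  # fixed seed so the run is repeatable

# A pure strategy is the special case that puts all probability on one choice.
always_defect = {"cooperate": 0.0, "defect": 1.0}
# A mixed strategy spreads probability over several pure strategies.
mostly_cooperate = {"cooperate": 0.7, "defect": 0.3}

print(play_mixed(always_defect, rng))
draws = [play_mixed(mostly_cooperate, rng) for _ in range(1000)]
print(draws.count("cooperate") / len(draws))
```

Over many draws, the share of "cooperate" choices should settle near the chosen probability of 0.7.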

In the game (or interaction), every player makes each decision on the basis of what they believe is in their best interest. The reason is that each actor is assumed to be a rational actor: they do what they believe is best for them. The Prisoner’s Dilemma is no different. It is a game with specific decision options for each actor, and it is used to help us understand cooperation between two individuals.

Prisoner’s Dilemma Explained

The backstory to the game is as follows. Two individuals are arrested for a crime. The police believe they also committed another crime that carries a much harsher penalty, but they cannot prove it (although they do have enough evidence for the lesser crime). The authorities plan to question the two individuals to see whether they will tell on one another for the more serious crime. The two are separated, so they are unable to communicate. Each prisoner has two options: admit to committing the crime, or deny committing it.

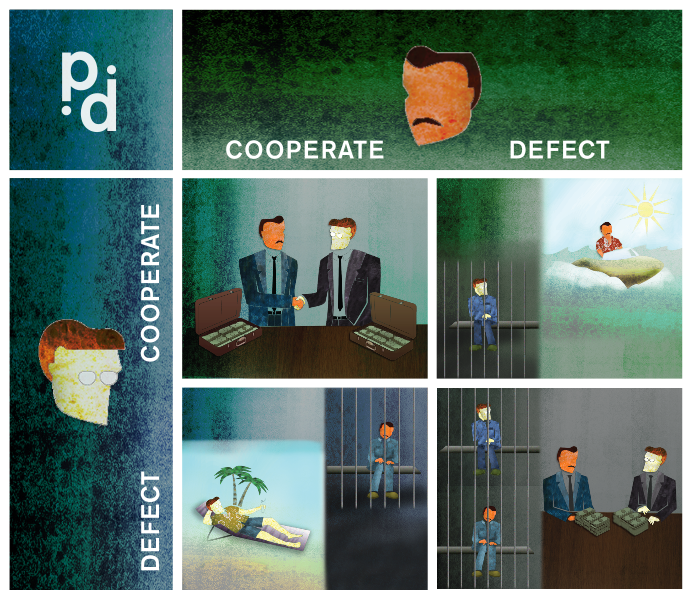

The outcome will depend on the choices each individual makes. Here is an example of what the outcomes will be for each prisoner under each of the four possible combinations of choices.

So, Player Green (or Player 1) has two options: cooperate with the other player or defect (tell on the other). Player Blue (or Player 2) has the same two options. The authorities offer a reduced penalty for defecting (in this case, no penalty). The outcomes of each player’s decisions are penalties on a scale of 0-5, where lower is better. If one player defects and the other does not, the one who defects gets immunity (a penalty of 0), whereas the one who continues to cooperate with their partner gets the harshest penalty. The same outcomes apply with the roles reversed. If both cooperate with one another (that is, neither tells on the other), they each get a penalty of 1, which is each player’s second-best outcome. However, the best choice for Player Green (Player 1) is to defect whatever Player Blue does, and the same is true for Player Blue (Player 2). When both defect, they each get a penalty of 3.
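The penalties just described can be laid out as a small lookup table. The Python sketch below does this and checks Player 1's best reply to each of Player 2's choices. The value 5 for the "harshest penalty" is an assumption inferred from the 0-5 scale, and the names `PENALTY` and `best_reply_for_player_1` are illustrative.

```python
# Penalties as (Player 1, Player 2); lower is better.
# 0, 1 and 3 come from the text; 5 for the "harshest penalty" is an
# assumption read off the stated 0-5 scale.
PENALTY = {
    ("cooperate", "cooperate"): (1, 1),
    ("cooperate", "defect"): (5, 0),
    ("defect", "cooperate"): (0, 5),
    ("defect", "defect"): (3, 3),
}
CHOICES = ("cooperate", "defect")


def best_reply_for_player_1(other_choice):
    """Return Player 1's penalty-minimising choice against a fixed Player 2 choice."""
    return min(CHOICES, key=lambda mine: PENALTY[(mine, other_choice)][0])


for other in CHOICES:
    reply = best_reply_for_player_1(other)
    print(f"If Player 2 plays {other}, Player 1 does best to {reply}")
```

Under these numbers, defecting is the better reply in both cases, which is why it is called a dominant choice. The table is symmetric, so the same holds for Player 2.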

So, in rational choice theory, if each player acts rationally (doing what is best for them), the outcome is determined: both will tell (defect). The reason is that “No matter what one guy does, the other guy is better off ratting him out. Yet if each player works for his own self interest, both are made worse off. It’s a frustrating problem. Game theorists call it a Nash equilibrium—one in which nobody can improve their position by unilateral move. It takes trust” (Satell, 2015). As one source explains, “The unique equilibrium for this game is a Pareto-suboptimal solution—that is, rational choice leads the two players to both play defect even though each player’s individual reward would be greater if they both played cooperate. In equilibrium, each prisoner chooses to defect even though both would be better off by cooperating, hence the ‘dilemma’ of the title” (New World Encyclopedia, 2015).
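Both claims in the quotations (that mutual defection is the unique equilibrium, and that it is Pareto-suboptimal) can be checked by brute force over the four outcomes. The sketch below is one way to do so. It uses the penalty numbers from this article, with 5 assumed for the harshest penalty, and the helper names `is_nash` and `is_pareto_dominated` are hypothetical.

```python
from itertools import product

# Penalties as (Player 1, Player 2); lower is better.
# The value 5 is an assumption based on the 0-5 scale in the text.
PENALTY = {
    ("cooperate", "cooperate"): (1, 1),
    ("cooperate", "defect"): (5, 0),
    ("defect", "cooperate"): (0, 5),
    ("defect", "defect"): (3, 3),
}
CHOICES = ("cooperate", "defect")


def is_nash(profile):
    """True if neither player can lower their own penalty by switching alone."""
    p1, p2 = profile
    current = PENALTY[profile]
    p1_ok = all(PENALTY[(alt, p2)][0] >= current[0] for alt in CHOICES)
    p2_ok = all(PENALTY[(p1, alt)][1] >= current[1] for alt in CHOICES)
    return p1_ok and p2_ok


def is_pareto_dominated(profile):
    """True if another outcome is no worse for both players and better for one."""
    mine = PENALTY[profile]
    return any(
        other[0] <= mine[0] and other[1] <= mine[1] and other != mine
        for other in PENALTY.values()
    )


nash = [p for p in product(CHOICES, repeat=2) if is_nash(p)]
print("Nash equilibria:", nash)
print("Is mutual defection Pareto-dominated?", is_pareto_dominated(("defect", "defect")))
```

With these penalties, the only equilibrium found is mutual defection, and it is dominated by mutual cooperation, where both players receive a lower penalty.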

Here is a video that also explains the prisoner’s dilemma (although the video uses different penalty numbers, the concept is the same).

How to Beat the Prisoner’s Dilemma

Scholars have pointed out different ways that the two prisoners can beat the dilemma they face, namely the possibility of turning on one another and both ending up with longer prison sentences. One way to avoid the longer sentence is to play the prisoner’s dilemma game multiple times. If there is only one iteration, there is no history showing how the other will act, which leaves a player with no evidence that the other will cooperate. If the game is repeated, however, each player can see what the other chose and make the same choice in the next round. Walker poses the question, and answers it, as follows:

“Why would we expect to see cooperative play? When the PD game is being played repeatedly, my cooperation can be construed as a signal that I will continue to cooperate in future plays. It establishes a reputation that I will cooperate, and this may induce the other player to cooperate as well, in order not to lose my cooperation. Moreover, the same argument applies to him: he may cooperate on the current play for the same reason I’m considering cooperation — in order to establish his own reputation, hoping it will induce me to cooperate in the future” (Walker, ND).

Thus, if the other player decides to cooperate, you can make the same decision. If the other player does not cooperate, then on the next iteration of the game you can “punish” the individual who turned you in during the previous round (Satell, 2015). This has also been called a “tit-for-tat” strategy (Walker, ND).
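A repeated game with a tit-for-tat player can be simulated in a few lines. The following sketch is a simplified illustration rather than a reproduction of Walker's analysis. It reuses this article's penalty numbers (with 5 assumed for the harshest penalty), and the ten-round length and the function names are arbitrary choices.

```python
# Penalties as (Player 1, Player 2); lower is better.
# The value 5 is an assumption based on the 0-5 scale in the text.
PENALTY = {
    ("cooperate", "cooperate"): (1, 1),
    ("cooperate", "defect"): (5, 0),
    ("defect", "cooperate"): (0, 5),
    ("defect", "defect"): (3, 3),
}


def tit_for_tat(own_history, other_history):
    """Cooperate in the first round, then copy the other player's last move."""
    return other_history[-1] if other_history else "cooperate"


def always_defect(own_history, other_history):
    """Defect in every round, regardless of history."""
    return "defect"


def play_repeated(strategy_1, strategy_2, rounds):
    """Play the game `rounds` times and return each player's total penalty."""
    history_1, history_2 = [], []
    total_1 = total_2 = 0
    for _ in range(rounds):
        move_1 = strategy_1(history_1, history_2)
        move_2 = strategy_2(history_2, history_1)
        penalty_1, penalty_2 = PENALTY[(move_1, move_2)]
        total_1 += penalty_1
        total_2 += penalty_2
        history_1.append(move_1)
        history_2.append(move_2)
    return total_1, total_2


print(play_repeated(tit_for_tat, tit_for_tat, 10))      # (10, 10)
print(play_repeated(tit_for_tat, always_defect, 10))    # (32, 27)
print(play_repeated(always_defect, always_defect, 10))  # (30, 30)
```

In this toy setup, two tit-for-tat players accumulate far lower penalties than two constant defectors. A defector facing tit-for-tat gains only in the first round and is punished in every round after it.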

Punishment is not the only mechanism for building trust; other suggestions have been raised. For example, “A more viable strategy that is emerging for leaders is to network their organization by creating personal relationships among people from disparate groups through embedding, combined training, liaison programs and promotion policies. McChrystal used all of these to create trust among his high performing groups. Further, research into networks finds that it takes relatively few connections to drastically reduce social distance, so a networking strategy is viable even for large organizations. Even if two people have never met, a mutual relationship with a trusted third party can help close the gap quickly” (Satell, 2015).

In addition, the social institutions in place could also affect a person’s willingness to defect (Yvain, 2012). Say a person is worried about their reputation in their family, community, or greater society. Even though defecting will get them less time in jail than if they cooperated and the other player defected, the social consequences of defecting might be a greater punishment to them than additional jail time.
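One way to picture this effect is to add a social cost to the penalty of whoever defects and see how the best reply changes. The sketch below is a hypothetical model, not one taken from Yvain (2012). The `reputation_cost` parameter and its values are assumptions, as is the value 5 for the harshest penalty.

```python
# A player's own penalty keyed by (own choice, other's choice); lower is better.
OWN_PENALTY = {
    ("cooperate", "cooperate"): 1,
    ("cooperate", "defect"): 5,  # assumed top of the 0-5 scale
    ("defect", "cooperate"): 0,
    ("defect", "defect"): 3,
}
CHOICES = ("cooperate", "defect")


def total_cost(mine, other, reputation_cost):
    """Jail penalty plus a hypothetical social cost paid only when defecting."""
    social = reputation_cost if mine == "defect" else 0
    return OWN_PENALTY[(mine, other)] + social


def best_reply(other, reputation_cost):
    """Return the choice with the lowest total cost against a fixed opponent choice."""
    return min(CHOICES, key=lambda mine: total_cost(mine, other, reputation_cost))


for cost in (0, 3):
    replies = {other: best_reply(other, cost) for other in CHOICES}
    print(f"reputation cost {cost}: {replies}")
```

With these numbers, any reputation cost above 2 makes cooperating the better reply whatever the other player does.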

Testing the Prisoner’s Dilemma

While the Prisoner’s Dilemma is a highly cited example in international relations theory, it is also important to examine whether the predicted outcomes arise in the real world. Again, rationally, every player should defect in order to receive their best outcome. But other factors might drive them to cooperate, even in a one-time iteration of the game.

For example, two scholars from the University of Hamburg decided to test the Prisoner’s Dilemma on student subjects, as well as on prisoners. The experiment differed from the examples of the prisoner’s dilemma in the literature in that, instead of prison sentences, the subjects were given rewards: either money (for the students) or cigarettes and coffee (for the prisoners) (Nisen, 2013). Given what rational choice says about motivations, it was expected that many would defect. What they found was interesting: “only 37% of students cooperated. Inmates cooperated 56% of the time. On a pair basis, only 13% of student pairs managed to get the best mutual outcome and cooperate, whereas 30% of prisoners do. In the sequential game, far more students (63%) cooperate, so the mutual cooperation rate skyrockets to 39%. For prisoners, it remains about the same” (Nisen, 2013).

It is interesting to consider what might explain these outcomes. On the one hand, it is possible that people are not as distrusting of others as the theory assumes. On the other hand, the outcome of this experiment could also reflect the fact that the stakes were not as high, or other motivations not captured in the study.
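As a rough arithmetic check on the pair-level figures quoted from Nisen (2013), one can ask what mutual cooperation rate would be expected if the two members of a pair chose independently, each at the reported individual rate. Independence is an assumption made here for illustration; it is not a claim from the study. The sketch is limited to the simultaneous game.

```python
# Individual cooperation rate and reported mutual-cooperation rate for the
# simultaneous game, as quoted from Nisen (2013).
reported = {
    "students": (0.37, 0.13),
    "prisoners": (0.56, 0.30),
}


def expected_pair_rate(individual_rate):
    """Chance that both members of a pair cooperate if choices are independent."""
    return individual_rate ** 2


for group, (individual, observed) in reported.items():
    predicted = expected_pair_rate(individual)
    print(f"{group}: predicted {predicted:.1%}, reported {observed:.0%}")
```

Under that assumption the predicted rates are about 13.7% for students and 31.4% for prisoners, close to the reported 13% and 30%.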

Prisoner’s Dilemma References

New World Encyclopedia (2015). Prisoner’s Dilemma. June 2, 2015. Available Online: http://www.newworldencyclopedia.org/entry/Prisoner’s_dilemma

Nisen, M. (2013). They Finally Tested the ‘Prisoner’s Dilemma’ On Actual Prisoners–And The Results Were Not What You Would Expect. Business Insider. July 21, 2013. Available Online: http://www.businessinsider.com/prisoners-dilemma-in-real-life-2013-7

Satell, G. (2015). How to Build Trust, Even With Your Enemies. Forbes. August 8, 2015. Available Online: http://www.forbes.com/sites/gregsatell/2015/08/08/how-to-build-trust-even-with-your-enemies/#23cb755e5e84

Walker (ND). Repetition and Reputation in the Prisoner’s Dilemma. Available Online: http://www.u.arizona.edu/~mwalker/10_GameTheory/RepeatedPrisonersDilemma.pdf

Yvain (2012). Real World Solutions to the Prisoner’s Dilemma. LessWrong. July 3, 2012. Available Online: http://lesswrong.com/lw/del/real_world_solutions_to_prisoners_dilemmas/

Zagare, F. C. (1986). Recent Advancements in Game Theory and Political Science. Chapter 3, pages 60-90. Available Online: https://www.acsu.buffalo.edu/~fczagare/Chapters/Recent.PDF